Direction: Earthbound

(2024.12–ongoing)

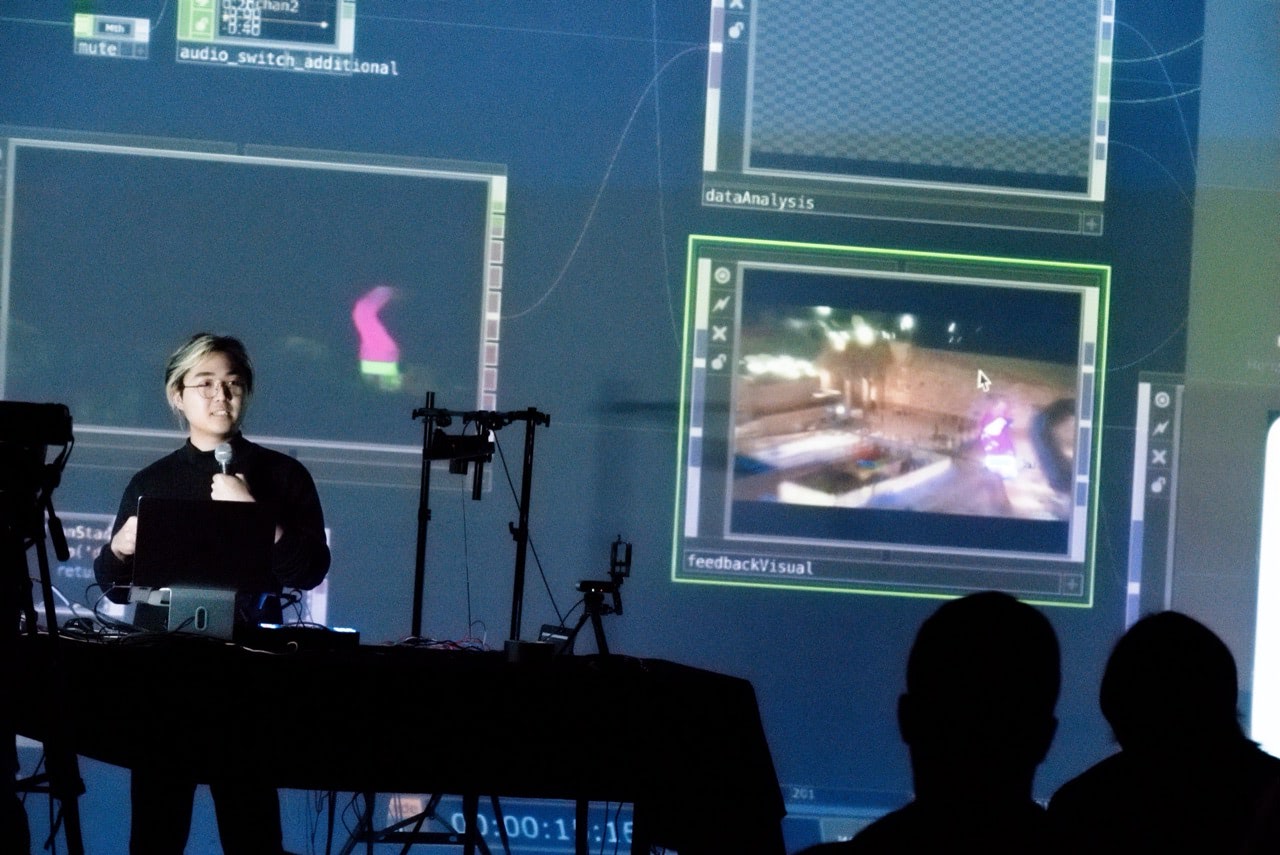

Direction: Earthbound is a multimedia performance and an artistic exploration that harnesses live-streamed surveillance technology to examine the relationship between virtual and geographical distance. With a custom-built software system, the artist interprets the camera feeds as a narrative and expressive sequence, crafting an evolving landscape of visuals and sound in real time.

In this work, surveillance video feeds are repurposed as material for generating colors, patterns, and sounds. The artist builds an audiovisual instrument on top of existing media and internet infrastructure, including a streaming platform (YouTube) and a security camera service (EarthCam). Within this system, active play and performance become a gesture of reclaiming digital space for the public, seeking sentimental moments amid the infrastructure's original, utilitarian functions.

In the early days of the internet, utopian visions dominated imaginings of the future, and technological development was considered the foundation of a better society. As capitalism has taken over cyberspace, its dystopian counterpart now appears to be our foreseeable future.

This work uses machines to transmit video and capture data, which the artist then interprets and alters. In doing so, it questions the prevailing view of technology as purely practical and functional, shifting toward its expressive potential. During the performance, the technology becomes invisible while the performer and audience are in the spotlight. The work views technology through an optimistic lens, as a tool for fostering human connection.

We use and imagine coordinates in virtual space as a mapping of geographical locations, yet the notion of distance does not exist in the digital realm. McLuhan’s global village has long been established and is thriving; with a click, we can get to virtually anywhere.

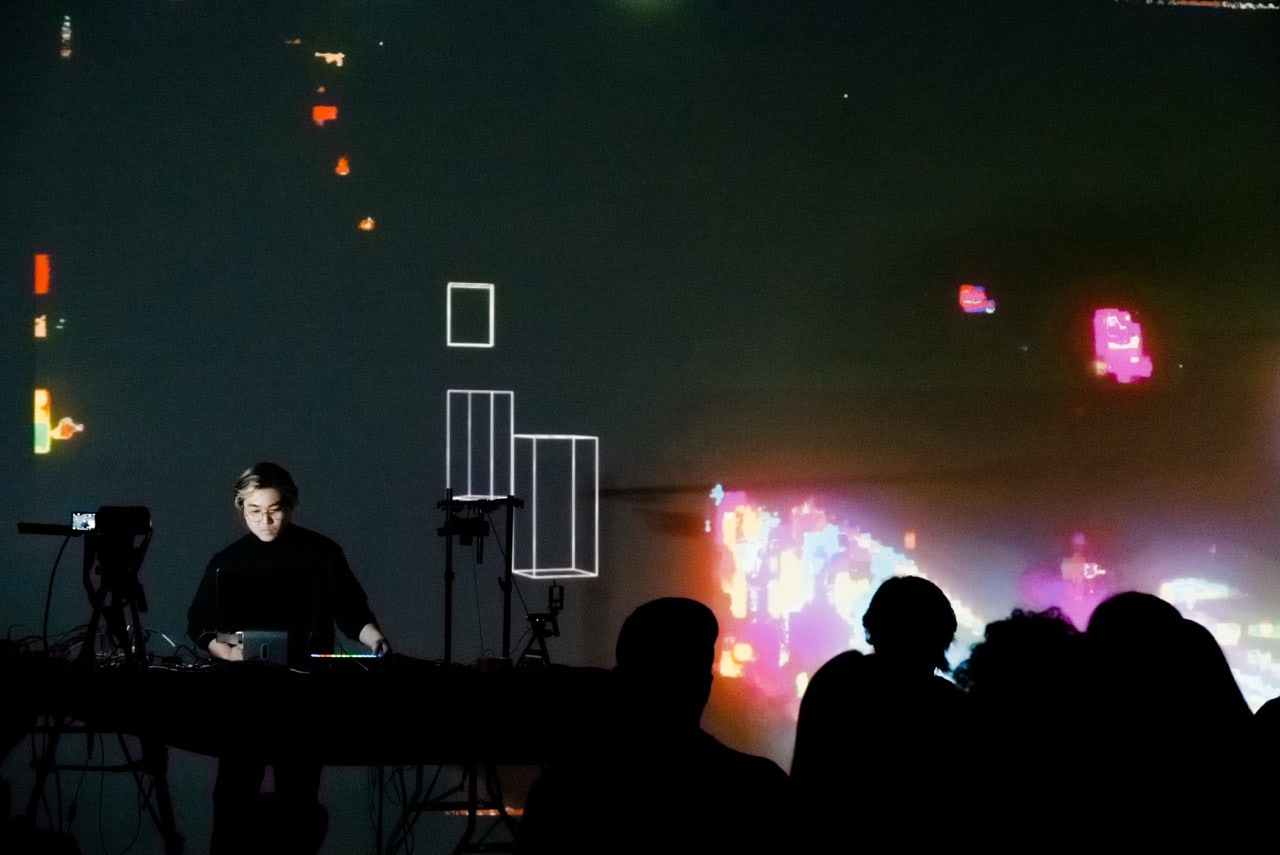

The artist takes up this overlooked distance, reconstructing a virtual globe in its full dimensions as a real-time performance. Through varied landscapes, ambient light, and the presence of people, audiences are invited on a journey to imagine those distant perspectives, building an empathy rooted in, and reaching across, physical distance.

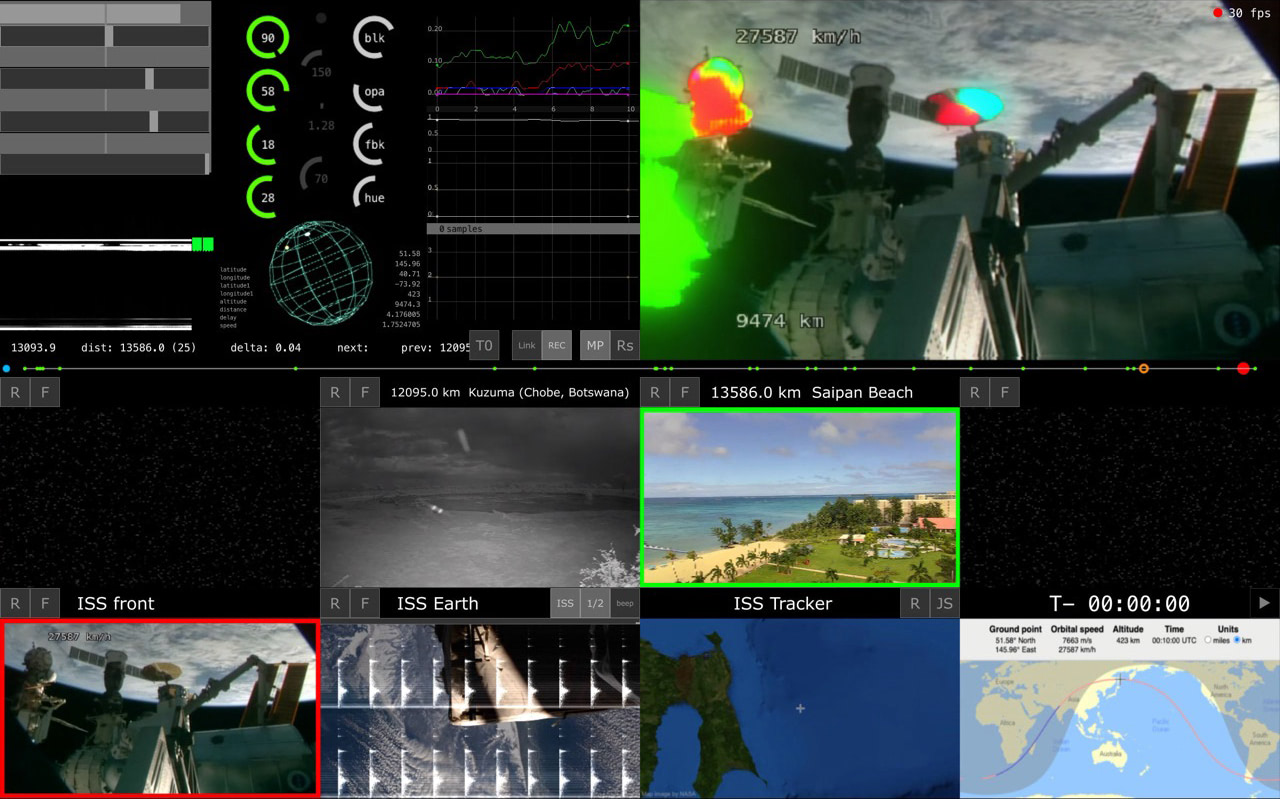

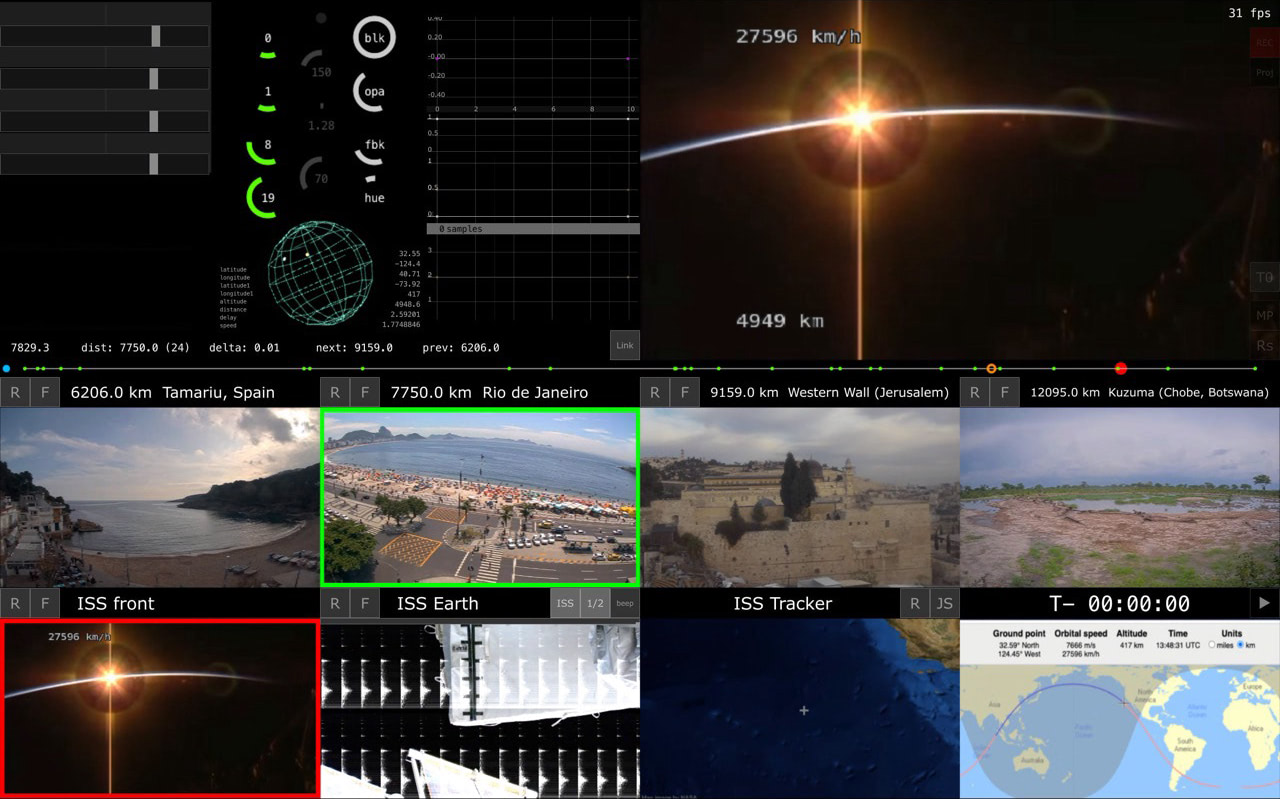

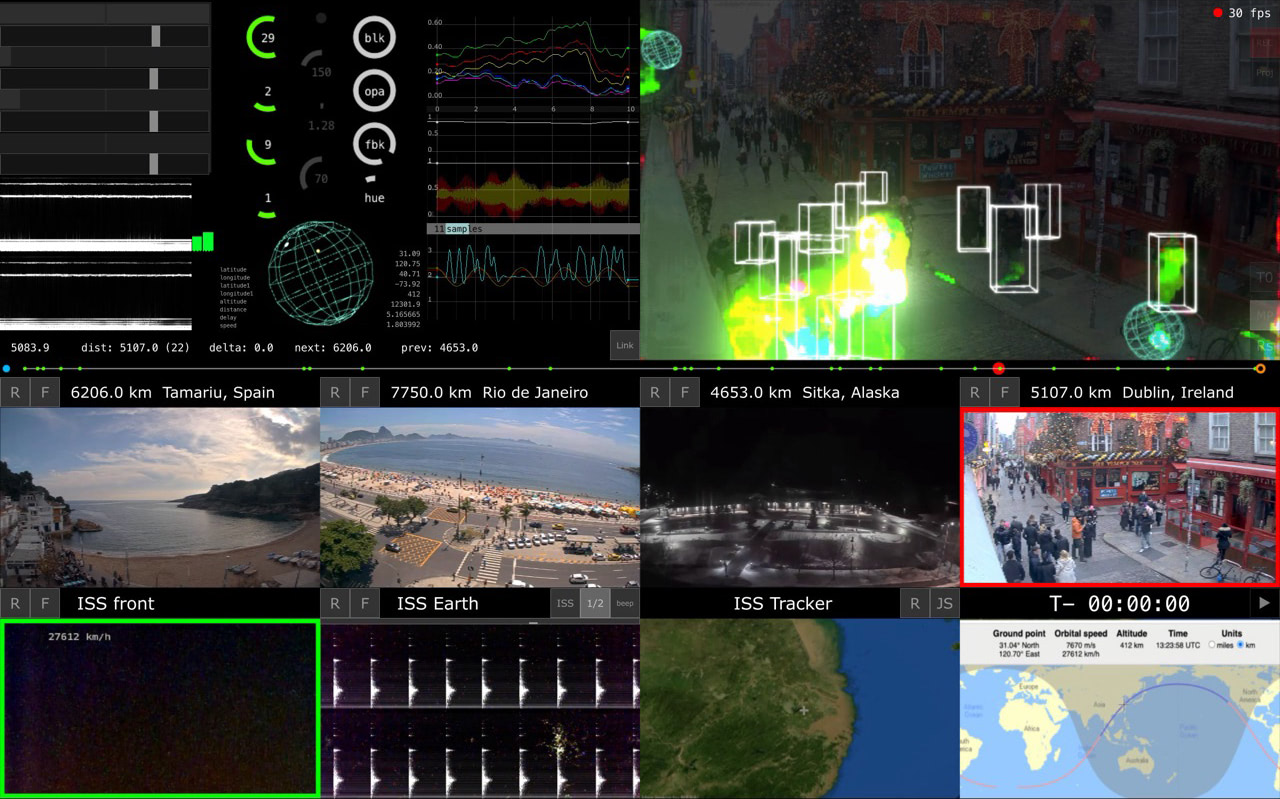

The performance runs on a custom-built software system in TouchDesigner made up of five key modules: 1) retrieving stream links, 2) obtaining geographical data, 3) sorting and switching, 4) analyzing video content, and 5) generating audio/visual overlays. Additional modules handle a) MIDI device mapping and b) extra audio/visual effects.

First, the system retrieves a list of camera streams from the web, including each stream's link and name (module 1). Module 2 searches each location with Google Maps, obtaining its coordinates and calculating its distance from the current location; these distances are used to sort the streams from near to far (module 3). The performer's input provides an index for selecting and navigating the list of streams, and a multi-camera view serves as a combined monitoring and switching interface.
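The distance-and-sort step (modules 2 and 3) can be sketched in plain Python. This is a minimal illustration, not the actual TouchDesigner network: it assumes each stream has already been geocoded to latitude and longitude, it uses the haversine great-circle formula (an assumption; the text only says distances are calculated), and the `Stream` fields, placeholder URLs, and performer location are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Stream:
    """One camera stream (hypothetical fields): display name, link, geocoded position."""
    name: str
    url: str
    lat: float  # latitude, degrees
    lon: float  # longitude, degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # min() guards against rounding pushing the argument just above 1 for near-antipodal points
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


Ranked = List[Tuple[float, Stream]]


def sort_near_to_far(streams: List[Stream], here_lat: float, here_lon: float) -> Ranked:
    """Pair each stream with its distance from the performer, nearest first."""
    ranked = [(haversine_km(here_lat, here_lon, s.lat, s.lon), s) for s in streams]
    ranked.sort(key=lambda pair: pair[0])
    return ranked


def select(ranked: Ranked, index: int) -> Tuple[float, Stream]:
    """Clamp the performer's index so a controller cannot step past either end of the list."""
    return ranked[max(0, min(index, len(ranked) - 1))]


# Usage with placeholder streams and a hypothetical performer location (New York City).
streams = [
    Stream("Tokyo", "https://example.com/tokyo", 35.6762, 139.6503),
    Stream("London", "https://example.com/london", 51.5074, -0.1278),
    Stream("Times Square", "https://example.com/times-square", 40.7580, -73.9855),
]
ranked = sort_near_to_far(streams, 40.7128, -74.0060)
for km, s in ranked:
    print(f"{s.name}: {km:,.0f} km")
```

Clamping the index in `select` is one way to let a MIDI knob or fader sweep the near-to-far list without wrapping around; whether the original system clamps or wraps is not stated.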

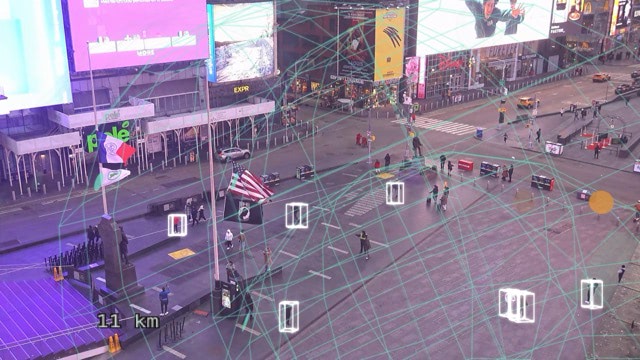

The program feed is rerouted through NDI Virtual Input as a webcam source into the MediaPipe plugin for TouchDesigner, which extracts data from the video, including object recognition and image segmentation results (module 4). The last module (5) uses the object data to generate geometry and places the segmented color layer into a feedback loop. The feedback layer is analyzed by color component and converted into oscillator frequencies, so the sound also signals changes in both the raw and the processed data. This translation depends largely on the performer's real-time input, which drastically shapes both the visuals and the generated audio, and is used creatively as a form of abstract storytelling.
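A rough sketch of the feedback-and-sonification idea in module 5 follows. It assumes frames are flat lists of RGB pixels in [0, 1], a fixed `decay` factor for the feedback loop, and an exponential mapping of each channel's average onto 110 to 880 Hz; all of these are illustrative choices, and the real patch presumably uses TouchDesigner operators (such as a Feedback TOP) rather than Python loops.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (r, g, b), each channel in [0, 1]
Frame = List[Pixel]                  # a flattened image (hypothetical representation)


def feedback_step(previous: Frame, incoming: Frame, decay: float = 0.9) -> Frame:
    """One iteration of a video feedback loop: the previous output fades by `decay`
    and the new segmented color layer is added on top, clamped to 1.0."""
    return [
        tuple(min(1.0, decay * p + q) for p, q in zip(prev_px, new_px))
        for prev_px, new_px in zip(previous, incoming)
    ]


def channel_means(frame: Frame) -> Pixel:
    """Average each color component over the whole frame."""
    n = len(frame)
    r, g, b = (sum(px[c] for px in frame) / n for c in range(3))
    return (r, g, b)


def level_to_hz(level: float, f_min: float = 110.0, f_max: float = 880.0) -> float:
    """Map a 0..1 level exponentially onto [f_min, f_max]: equal steps in level
    give equal musical intervals (110 to 880 Hz spans three octaves)."""
    return f_min * (f_max / f_min) ** level


def frame_to_frequencies(frame: Frame) -> List[float]:
    """One oscillator frequency per color component of the feedback layer."""
    return [level_to_hz(m) for m in channel_means(frame)]


# Usage: feed a tiny two-pixel segmented layer through the loop a few times.
layer = [(0.6, 0.1, 0.0), (0.0, 0.3, 0.2)]
state = [(0.0, 0.0, 0.0)] * len(layer)
for _ in range(3):
    state = feedback_step(state, layer)
    print([round(f, 1) for f in frame_to_frequencies(state)])
```

The exponential mapping is chosen so that equal changes in color produce equal musical intervals; a linear mapping in hertz would spend most of its octave range in the lower part of the color range.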

MediaPipe, a framework for real-time machine learning, is used to analyze the video feeds and recognize people and objects. In this system, two sets of data are retrieved from MediaPipe: x) the object detection model outputs each identified category and its on-screen coordinates, and y) the image segmentation model outputs a colormap of the areas identified as face, hair, skin, clothing, etc. The object data is used to generate geometric shapes, and the segmented colormap is used to create abstract colors and patterns through a video feedback loop.
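The two MediaPipe outputs could feed the visuals roughly as follows. This sketch does not call MediaPipe itself: `Detection` is a hypothetical stand-in for one detection result (a category label and a pixel-space bounding box), the category-to-shape table is invented for illustration, and the palette assumes the segmentation mask stores one integer class index per pixel, with an index order chosen here arbitrarily.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    """Hypothetical container for one object-detection result."""
    category: str
    x: float       # left edge, pixels
    y: float       # top edge, pixels
    width: float   # pixels
    height: float  # pixels


@dataclass
class Shape:
    kind: str   # which primitive to draw
    cx: float   # center x, normalized 0..1
    cy: float   # center y, normalized 0..1
    size: float # largest box side, normalized by the larger frame dimension


# Hypothetical category -> primitive table; unlisted categories become squares.
SHAPE_FOR_CATEGORY = {"person": "circle", "car": "triangle"}


def detection_to_shape(d: Detection, frame_w: int, frame_h: int) -> Shape:
    """Turn a bounding box into a resolution-independent shape description."""
    cx = (d.x + d.width / 2) / frame_w
    cy = (d.y + d.height / 2) / frame_h
    size = max(d.width, d.height) / max(frame_w, frame_h)
    return Shape(SHAPE_FOR_CATEGORY.get(d.category, "square"), cx, cy, size)


# Hypothetical palette keyed by the per-pixel class index in the segmentation mask.
PALETTE: Dict[int, Tuple[int, int, int]] = {
    0: (0, 0, 0),       # background: black, so only people feed the loop
    1: (255, 120, 0),   # hair
    2: (0, 180, 255),   # skin
    3: (255, 0, 160),   # face
    4: (120, 255, 80),  # clothing
}


def colorize_mask(mask: List[List[int]]) -> List[List[Tuple[int, int, int]]]:
    """Replace every class index with its palette color; unknown indices become white."""
    return [[PALETTE.get(c, (255, 255, 255)) for c in row] for row in mask]


# Usage: one detected person in a 1280x720 frame, and a tiny 2x2 mask.
print(detection_to_shape(Detection("person", 100, 50, 200, 400), 1280, 720))
print(colorize_mask([[0, 3], [4, 1]]))
```

Normalizing by frame size keeps the generated geometry independent of each camera's resolution, which matters when switching between streams of different sizes.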

The audio comprises two sets of oscillators, tuned with reference to just intonation and the Pythagorean scale. Adjusting the ratios and base frequencies dramatically changes the emotional character of the sound.
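The difference between the two tuning systems can be made concrete with exact ratios. The sketch below builds a Pythagorean scale by stacking pure fifths (3/2) and folding them into one octave, and compares it with the 5-limit just-intonation major scale. The choice of a major scale, the 220 Hz base, and the helper names are assumptions, since the text does not specify which ratios the oscillators use.

```python
from fractions import Fraction
from typing import List

# 5-limit just-intonation major scale: ratios of small whole numbers.
JUST_MAJOR = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(4, 3),
              Fraction(3, 2), Fraction(5, 3), Fraction(15, 8)]


def fold_into_octave(r: Fraction) -> Fraction:
    """Multiply or divide by 2 until 1 <= r < 2."""
    while r >= 2:
        r /= 2
    while r < 1:
        r *= 2
    return r


def pythagorean_major() -> List[Fraction]:
    """Stack pure fifths (3/2) from one below the tonic to five above
    (F C G D A E B relative to C) and fold each into a single octave."""
    return sorted(fold_into_octave(Fraction(3, 2) ** k) for k in range(-1, 6))


def to_hz(base_hz: float, ratios: List[Fraction]) -> List[float]:
    """Turn scale ratios into oscillator frequencies above a base frequency."""
    return [base_hz * float(r) for r in ratios]


# Usage: the same hypothetical base frequency in both tunings.
base = 220.0
print(to_hz(base, JUST_MAJOR))
print(to_hz(base, pythagorean_major()))
```

The major third, for example, is 5/4 in just intonation but 81/64 in the Pythagorean scale; at a 220 Hz base that is 275 Hz versus 278.4375 Hz, a gap of one syntonic comma (81/80, about 21.5 cents). Moving between such ratios is one concrete way the same base frequency can take on a noticeably different character.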

In Direction: Earthbound, the artist operates an imaginary vehicle running along a track of distance, carrying passengers outward to imagine distant perspectives.

Harnessing live-streamed surveillance feeds and artificial intelligence, the performance crafts an evolving audiovisual landscape. As the perspective shifts outward, the work becomes increasingly fractured and distorted, a metaphor for the paradoxical nature of digital connectivity.

At its final destination, the vehicle reaches space, the perspective furthest from our everyday lives. All the different places and times, every sunrise and sunset, happen simultaneously on this tiny blue sphere wandering through the vast, empty cosmos. Distance on Earth becomes an arbitrary number, and beyond our differing opinions and stances, we share the same feelings toward the Earth.